Unveiling the Misleading Nature of AI Crash Alerts

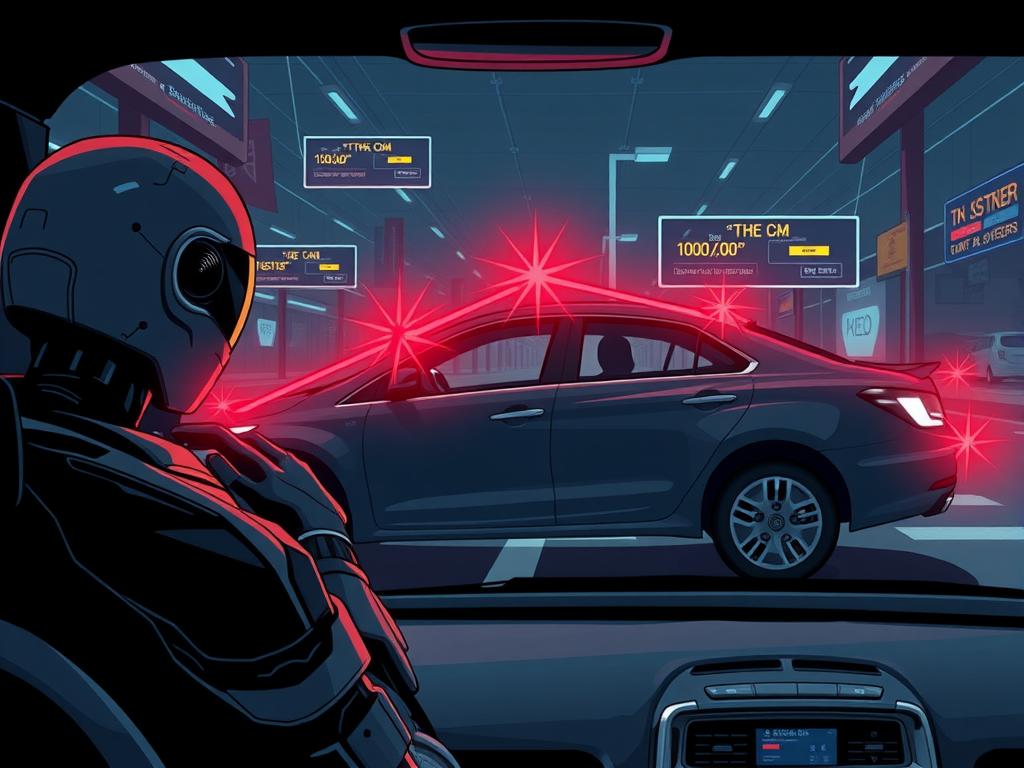

Not all AI crash alerts are created equal. These alerts can provide valuable insight and help prevent accidents on the road, but false positives can cause unnecessary panic and confusion. A central problem is that some notifications are misleading: they are triggered by harmless events or objects that pose no real threat to drivers.

For example, an AI system may misinterpret a plastic bag blowing across the road as a collision hazard, triggering a crash alert and prompting the driver to take evasive action that is not needed. This not only causes unnecessary stress but can also increase the risk of an accident by distracting the driver. Drivers should be aware of the limitations of AI crash alerts and exercise caution when responding to them.

Exposing the Inaccuracies in AI Crash Alert Systems

We rely on technology to keep us safe on the road, but AI crash alert systems may not be as accurate as we think. These systems are designed to detect potential crashes and warn drivers, yet they sometimes give false alerts. Causes include poor visibility, sudden movements, and glitches in the system itself. Being aware of these inaccuracies helps drivers avoid unnecessary panic or distraction.

One of the main issues with AI crash alert systems is their tendency to produce false positives: the system signals an imminent crash when no actual danger is present. This is frustrating for drivers and can prompt unnecessary braking or swerving, which can itself cause an accident. It is crucial to understand the limitations of these systems and not rely solely on them for safety on the road.

Another source of inaccuracy is the environment in which the system operates. Poor lighting or inclement weather can reduce the system's ability to detect potential crashes accurately, and sudden movements or changes in traffic patterns can confuse it and trigger false alerts. Drivers should remain vigilant and not rely solely on these systems to keep them safe.

In conclusion, while AI crash alert systems can be a useful tool for enhancing road safety, they are not infallible. It is essential for drivers to be aware of the potential inaccuracies of these systems and to use them as a supplemental aid, rather than a replacement for attentive driving. By understanding the limitations of AI crash alerts, we can ensure a safer and more informed driving experience for all.

The Pitfalls of Over-Reliance on AI for Crash Alerts

Have you ever experienced the frustration of receiving a false crash alert from your AI system? While artificial intelligence has undoubtedly improved our lives in many ways, over-reliance on AI for crash alerts has pitfalls. One of the main drawbacks is the potential for false positives, which can lead to unnecessary stress and wasted time for users.

AI systems are designed to detect patterns and anomalies in data to predict potential crashes. However, they are not foolproof and can sometimes misinterpret data, leading to false alerts. This can be particularly problematic in high-pressure situations where quick decision-making is crucial.

Another issue with relying too heavily on AI for crash alerts is the risk of becoming complacent. Users may start to trust the system blindly, without questioning its accuracy or limitations. This blind trust can be dangerous, as it can lead to a false sense of security and a lack of vigilance on the part of the user.

Overall, while AI technology has the potential to greatly improve crash alert systems, it is important to remember that it is not infallible. Users should always remain vigilant and not rely solely on AI for critical decision-making. By understanding the limitations of AI and using it as a tool rather than a crutch, we can ensure a safer and more reliable crash alert system for all.

Frequently Asked Questions

The False Positives of AI Crash Alerts

One common issue with AI crash alerts is the occurrence of false positives. False positives happen when the system incorrectly identifies a situation as a crash, leading to unnecessary alarm and disruption. While AI algorithms are designed to detect patterns and anomalies, they may sometimes misinterpret normal driving behaviors as potential crashes. It is important for developers to fine-tune the algorithms to reduce the number of false positives and improve the overall accuracy of crash alerts.
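To make "number of false positives" measurable, a developer could tally logged alerts against what actually happened and compute two ratios. The sketch below is only an illustration: the `AlertCounts` class, the function names, and all of the numbers are made up for this example and do not come from any real crash alert system.

```python
from dataclasses import dataclass


@dataclass
class AlertCounts:
    """Hypothetical tallies from comparing logged alerts with real outcomes."""
    true_positives: int   # alert fired and there was a real hazard
    false_positives: int  # alert fired but there was no hazard
    true_negatives: int   # no alert and no hazard
    false_negatives: int  # no alert but there was a real hazard


def false_positive_rate(c: AlertCounts) -> float:
    """Share of harmless situations that still triggered an alert."""
    harmless = c.false_positives + c.true_negatives
    return c.false_positives / harmless if harmless else 0.0


def precision(c: AlertCounts) -> float:
    """Share of fired alerts that corresponded to a real hazard."""
    fired = c.true_positives + c.false_positives
    return c.true_positives / fired if fired else 0.0


# Made-up numbers for illustration only.
counts = AlertCounts(
    true_positives=8,
    false_positives=32,
    true_negatives=9968,
    false_negatives=0,
)

print(f"false positive rate: {false_positive_rate(counts):.4f}")
print(f"precision: {precision(counts):.2f}")
```

With these invented counts, only a small fraction of harmless situations trigger an alert, yet most of the alerts a driver actually sees are false. That is one way to see why even an apparently low false positive rate can still feel like constant false alarms.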

Impact of False Positives on User Experience

False positives can have a significant impact on user experience, causing frustration and distrust in the AI crash alert system. When drivers receive frequent false alarms, they may become desensitized to the alerts or even disable the system altogether. This can compromise the safety benefits of AI crash alerts, as users may ignore genuine warnings due to previous false alarms. Developers need to address false positives to ensure a positive user experience and encourage continued use of AI crash alert technology.

Challenges in Minimizing False Positives

Minimizing false positives in AI crash alerts poses several challenges for developers. One challenge is balancing sensitivity and specificity in the algorithm, as increasing sensitivity to detect more crashes may also lead to more false alarms. Additionally, the diversity of driving behaviors and road conditions makes it difficult to create a one-size-fits-all solution. Developers need to continuously refine and adapt the algorithms to account for these variations.
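The sensitivity and specificity trade-off can be illustrated with a small sketch. It assumes, purely for illustration, that the system produces a risk score between 0 and 1 and raises an alert when the score reaches a threshold. The scores, labels, thresholds, and the function name `sensitivity_specificity` are all hypothetical.

```python
def sensitivity_specificity(scores, labels, threshold):
    """Return (sensitivity, specificity) when alerting on scores >= threshold."""
    pairs = list(zip(scores, labels))
    tp = sum(1 for s, y in pairs if y and s >= threshold)      # real hazard, alerted
    fn = sum(1 for s, y in pairs if y and s < threshold)       # real hazard, missed
    tn = sum(1 for s, y in pairs if not y and s < threshold)   # harmless, no alert
    fp = sum(1 for s, y in pairs if not y and s >= threshold)  # harmless, alerted
    sensitivity = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    return sensitivity, specificity


# Hypothetical risk scores and ground truth (True = real crash risk).
scores = [0.95, 0.80, 0.55, 0.70, 0.40, 0.30, 0.20, 0.10]
labels = [True, True, True, False, False, False, False, False]

for threshold in (0.3, 0.5, 0.75):
    sens, spec = sensitivity_specificity(scores, labels, threshold)
    print(f"threshold={threshold:.2f}  sensitivity={sens:.2f}  specificity={spec:.2f}")
```

On this toy data, the lowest threshold catches every real hazard but alerts on three of the five harmless events, while the highest threshold raises no false alarms but misses one real hazard. Real driving data is far messier, which is why a single fixed threshold is unlikely to suit every road and driver.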